Partial references:

1, File Layout and File System Performance

2, File system aging - increasing the relevance of file system benchmarks

3, Workload-Specific File System Benchmarks

Motivation: Some people claim that Linux file systems never fragment. During my research I found that this is not true.

No file system can completely eliminate fragmentation. The degree of fragmentation varies with the circumstances: it depends on the on-disk structure of the file system and on its block allocation strategy. The performance penalty caused by fragmentation also differs from one file system to another.

The following test analyzes fragmentation and its effect on performance:

(If you want to run the test yourself, please see HERE.)

The file list used in the test is HERE.

A random sample of test files (sample A) was generated, with the following number of files in each size range:

0-4KB: 15000

4KB-8KB: 6000

8KB-16KB: 6000

16KB-100KB: 3000

1MB: 10

100MB: 1
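As a rough illustration, a sample with this size distribution could be generated as follows. This is only a sketch: the post does not say how sizes were drawn within each range, so the uniform draw, the `generate_sample` function and the `f00000`-style file names are all assumptions.

```python
import random

KB = 1024
MB = 1024 * KB

# (low, high, count) in bytes, taken from the size table above.
# Drawing sizes uniformly inside each range is an assumption.
SIZE_CLASSES = [
    (1, 4 * KB, 15000),
    (4 * KB + 1, 8 * KB, 6000),
    (8 * KB + 1, 16 * KB, 6000),
    (16 * KB + 1, 100 * KB, 3000),
    (MB, MB, 10),
    (100 * MB, 100 * MB, 1),
]


def generate_sample(seed=0):
    """Return a list of (file_name, size_in_bytes) pairs for sample A."""
    rng = random.Random(seed)
    sample = []
    for low, high, count in SIZE_CLASSES:
        for _ in range(count):
            name = "f%05d" % len(sample)  # hypothetical naming scheme
            sample.append((name, rng.randint(low, high)))
    return sample


if __name__ == "__main__":
    sample = generate_sample()
    total = sum(size for _, size in sample)
    print(len(sample), "files,", total // MB, "MB in total")
```

Under the uniform assumption the total comes out in the low 400MB range, which is in the same region as the sample size reported by "du".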

The following diagram shows the cumulative file size distribution of the test sample; it does not include the 1MB and 100MB files:

The total sample size is about 420MB (as reported by "du"; the disk space actually occupied may be 10%-20% greater).

Ten randomly selected file lists each cover 10% of the total file size of the sample (these are called "A10-x", 1<=x<=10; each list includes 3,000 files of 0-100KB, two 1MB files and one 100MB file).

Ten further randomly selected file lists each cover 20% of the total file size of the sample (these are called "A20-x", 1<=x<=10; each list includes 6,000 files of 0-100KB, two 1MB files and one 100MB file).

To ensure the accuracy of the results, the tests were conducted on partitions of several different sizes. XFS failed in the 512MB (90% full) test due to insufficient disk space.
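The selection of the A10-x and A20-x lists could look roughly like the sketch below. The function names `pick_file_list` and `build_file_lists` are hypothetical, and the example runs on a scaled-down sample (300 small files instead of 30,000) so that it finishes instantly.

```python
import random

KB = 1024
MB = 1024 * KB


def pick_file_list(sample, small_count, rng):
    """Pick one A10-x / A20-x style list from (name, size) pairs.

    Takes `small_count` files of at most 100KB, two 1MB files and the
    100MB file, and returns the chosen names in random order.
    """
    small = [name for name, size in sample if size <= 100 * KB]
    medium = [name for name, size in sample if size == MB]
    large = [name for name, size in sample if size == 100 * MB]
    chosen = rng.sample(small, small_count) + rng.sample(medium, 2) + large[:1]
    rng.shuffle(chosen)
    return chosen


def build_file_lists(sample, small_count, copies=10, seed=0):
    """Build `copies` independent file lists (x = 1..copies)."""
    rng = random.Random(seed)
    return [pick_file_list(sample, small_count, rng) for _ in range(copies)]


if __name__ == "__main__":
    # Scaled-down stand-in for sample A: 300 small files, ten 1MB, one 100MB.
    rng = random.Random(1)
    sample = [("s%03d" % i, rng.randint(1, 100 * KB)) for i in range(300)]
    sample += [("m%02d" % i, MB) for i in range(10)]
    sample += [("big", 100 * MB)]
    a10 = build_file_lists(sample, small_count=30)   # 10% of the small files
    a20 = build_file_lists(sample, small_count=60)   # 20% of the small files
    print(len(a10), "lists of", len(a10[0]), "files;",
          len(a20), "lists of", len(a20[0]), "files")
```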

The test steps are :

1, Create the files according to the pre-generated random sample mentioned above (e.g. "# dd if=/dev/zero of=... bs=... count=1").

2, Read 10% or 20% of the files, according to the pre-generated file lists A10-x and A20-x. (Random-Read)

3, Delete the files in the file list of step 2. (Remove)

4, Shuffle the file list of step 2 and write the 10% or 20% of the files again. (Write)

5, Read all files. (Sequential-Read)
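The steps above can be sketched as a small timing harness. This is a minimal illustration, not the script used for the published results: the helper names (`write_file`, `read_file`, `timed`, `run_round`) are hypothetical, the demo uses 200 tiny files in a temporary directory, and it does not drop the page cache or remount the partition between steps, which a real measurement would need in order to hit the disk.

```python
import os
import random
import tempfile
import time


def write_file(path, size):
    # Same idea as "dd if=/dev/zero of=PATH bs=SIZE count=1": one write of zeros.
    with open(path, "wb") as f:
        f.write(b"\0" * size)
        f.flush()
        os.fsync(f.fileno())  # push the data to the device before timing stops


def read_file(path):
    with open(path, "rb") as f:
        while f.read(1 << 20):  # read in 1MB chunks until EOF
            pass


def timed(action, paths):
    """Apply `action` to every path and return the elapsed seconds."""
    start = time.perf_counter()
    for path in paths:
        action(path)
    return time.perf_counter() - start


def run_round(root, sizes, file_list, rng):
    """Run steps 2-5 once; `sizes` maps file name -> size in bytes."""
    subset = [os.path.join(root, name) for name in file_list]
    timings = {}
    timings["random_read"] = timed(read_file, subset)      # step 2
    timings["remove"] = timed(os.remove, subset)           # step 3
    shuffled = list(file_list)
    rng.shuffle(shuffled)
    start = time.perf_counter()
    for name in shuffled:                                   # step 4
        write_file(os.path.join(root, name), sizes[name])
    timings["write"] = time.perf_counter() - start
    everything = [os.path.join(root, name) for name in sorted(sizes)]
    timings["sequential_read"] = timed(read_file, everything)  # step 5
    return timings


if __name__ == "__main__":
    rng = random.Random(0)
    sizes = {"f%03d" % i: rng.randint(1, 16 * 1024) for i in range(200)}
    with tempfile.TemporaryDirectory() as root:
        for name, size in sizes.items():                    # step 1
            write_file(os.path.join(root, name), size)
        file_list = rng.sample(sorted(sizes), 20)           # 10% of the files
        print(run_round(root, sizes, file_list, rng))
```

For a real run, `root` would be the mount point of the partition under test, and `run_round` would be called once per file list.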

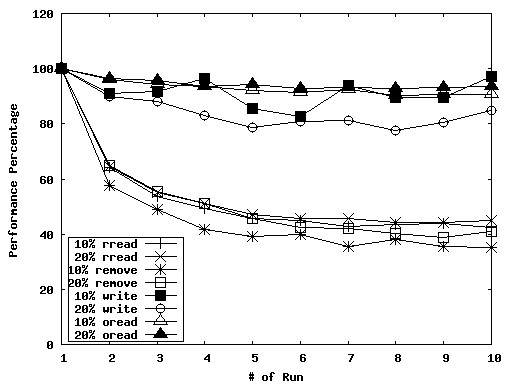

A total of 20 such test rounds are performed to measure the performance loss due to file-system fragmentation (odd rounds use the 10% file lists, even rounds the 20% file lists). The results follow:

512MB(90% full) | 768MB(60% full) | 1024MB(40% full) | Defragmentation

ext2-512MB(90% full):

ext3-512MB(90% full):

JFS-512MB(90% full):

Reiser3-512MB(90% full):

Reiser4-512MB(90% full):